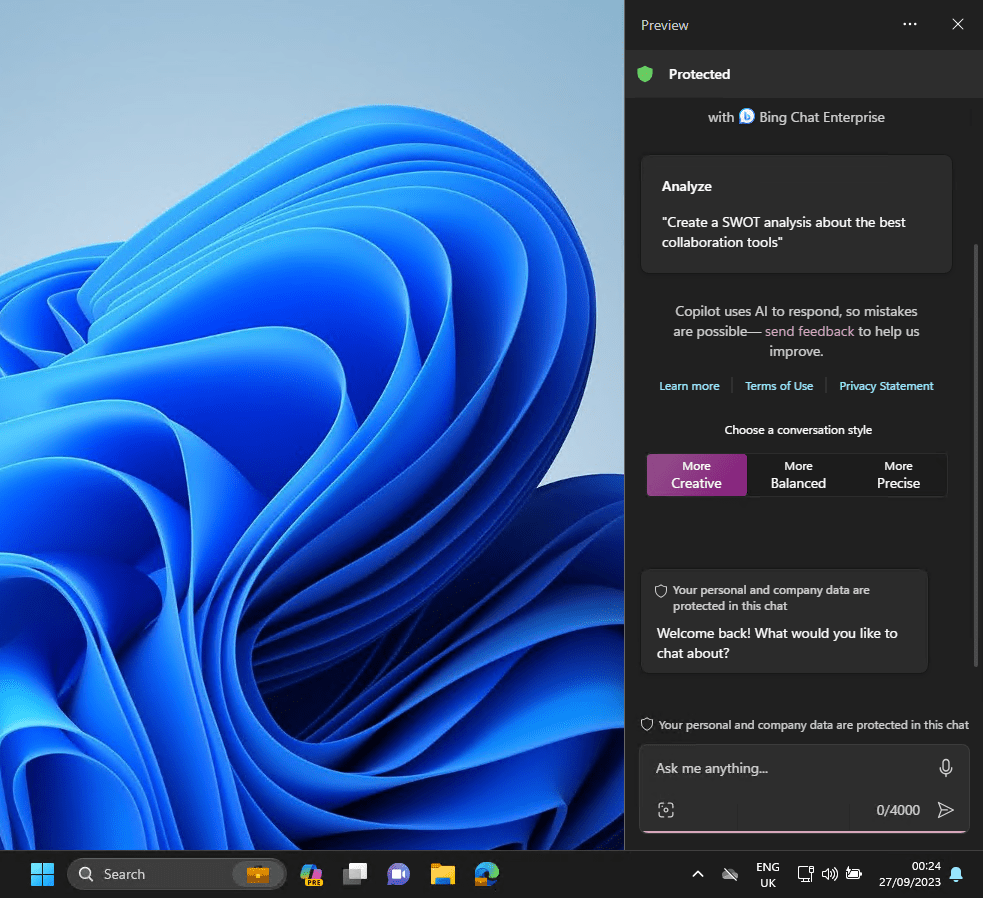

Since Microsoft 365 Copilot landed, I've had many conversations with businesses at every stage of their journey with it, from those just getting started to advanced users. I've gathered here some of the most common misconceptions I hear about data and M365 Copilot. Most of them boil down to one thing: misunderstanding what Copilot actually is and how it works under the bonnet.

In this post I'm talking about the paid Microsoft 365 Copilot licence that businesses typically use, not the free, personal Microsoft Copilot.

So let’s clear the air. Here are four common misconceptions I’ve heard, and the real story behind each one.

Misconception 1: “When I buy Copilot, I get my own private AI model”

Nope, that’s not how it works.

When you license Copilot, you're not spinning up your own personal GPT instance tucked away on a private server. What you're actually getting is secure access to a shared large language model hosted in Microsoft's cloud. Think of it like driving on a motorway: you're using the same road as everyone else, but your data stays in your own vehicle.

Microsoft's architecture is designed to keep your data isolated. Your prompts, Copilot's responses and the content it retrieves are processed securely and are not used to train the underlying foundation models. So while the model is shared, your data isn't.

Misconception 2: “My data never leaves my tenant”

This one’s a bit trickier. Your data does reside in your tenant, but when you use Copilot, the relevant bits of it are sent to Microsoft’s cloud-based AI models for processing.

That means your prompt, along with the context Copilot gathers (emails, files, chats and so on), is securely sent to the nearest available AI compute cluster. If that cluster is busy, your request might be routed to another Microsoft datacentre, possibly in another country. If you're in the EU, however, Microsoft commits to keeping that traffic within the EU Data Boundary.
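To make that routing rule concrete, here's a toy Python model of it. Everything in it is hypothetical: the cluster names, the "distance" scores and the busy flags are invented, and this only sketches the behaviour described above. It is not how Microsoft actually routes Copilot requests.

```python
from dataclasses import dataclass

# HYPOTHETICAL toy model of the routing behaviour described in the text.
# Cluster names, distances and busy flags are invented for illustration;
# this is not Microsoft's implementation.

@dataclass
class Cluster:
    name: str
    in_eu: bool
    distance: int  # rough "how far away" score; lower means nearer
    busy: bool

def pick_cluster(clusters: list[Cluster], user_in_eu: bool) -> Cluster:
    """Pick the nearest cluster that isn't busy, keeping EU users inside the EU."""
    eligible = [c for c in clusters if not c.busy]
    if user_in_eu:
        # EU Data Boundary: only EU clusters are eligible for EU users.
        eligible = [c for c in eligible if c.in_eu]
    if not eligible:
        raise RuntimeError("no eligible cluster available")
    return min(eligible, key=lambda c: c.distance)

clusters = [
    Cluster("eu-west", in_eu=True, distance=1, busy=True),
    Cluster("us-east", in_eu=False, distance=2, busy=False),
    Cluster("eu-north", in_eu=True, distance=3, busy=False),
]
print(pick_cluster(clusters, user_in_eu=True).name)   # eu-north
print(pick_cluster(clusters, user_in_eu=False).name)  # us-east
```

The nearest cluster is busy in both cases, so the request overflows elsewhere, but only the EU user is held to EU clusters.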

So yes, your data leaves your tenant, but it stays within Microsoft's secure cloud and within Microsoft's published data residency commitments.

Misconception 3: “Copilot can access all ‘Anyone’ share links in my organisation”

Not quite.

An 'Anyone' link (the kind that lets anyone who has the link view a file) doesn't automatically make that file discoverable by Copilot for every user. For Copilot to surface content from an 'Anyone' link, the user must have redeemed the link, meaning they've clicked it and opened the file.

Until that happens, Copilot treats the file as off-limits for that user. It operates strictly within the context of the user's permissions, so if you haven't clicked the link, Copilot won't see the file, even if the link exists somewhere in your tenant.
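The redemption rule can be sketched as a simple permission check. This is a simplified, hypothetical model: `File`, `copilot_can_use` and `redeem_anyone_link` are illustrative names, not Microsoft APIs, and real SharePoint permissions are far richer than this.

```python
from dataclasses import dataclass, field

# HYPOTHETICAL, simplified permission model for illustration only.
# These names are invented; this is not a Microsoft or SharePoint API.

@dataclass
class File:
    name: str
    direct_access: set[str] = field(default_factory=set)  # users granted access explicitly
    has_anyone_link: bool = False
    redeemed_by: set[str] = field(default_factory=set)    # users who have opened the 'Anyone' link

def copilot_can_use(user: str, f: File) -> bool:
    """True if the file falls within this user's permission scope."""
    if user in f.direct_access:
        return True
    # An 'Anyone' link only counts for a user once that user has redeemed it.
    return f.has_anyone_link and user in f.redeemed_by

def redeem_anyone_link(user: str, f: File) -> None:
    """Model the user clicking the 'Anyone' link and opening the file."""
    if f.has_anyone_link:
        f.redeemed_by.add(user)

budget = File("budget.xlsx", direct_access={"alice"}, has_anyone_link=True)
print(copilot_can_use("bob", budget))  # False: the link exists, but Bob hasn't opened it
redeem_anyone_link("bob", budget)
print(copilot_can_use("bob", budget))  # True: Bob has now redeemed the link
```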

It's also worth noting that 'Anyone' links are risky. They're essentially unauthenticated access tokens: anyone can forward them, and because access isn't tied to a signed-in identity, you can't tell who actually opened the file. Use them sparingly.

Misconception 4: “Copilot only sees my data if I attach it to the prompt”

Wrong again.

Copilot doesn't wait for you to upload a document or paste in a paragraph. It can automatically draw on any content you already have access to: emails, OneDrive files, SharePoint documents, Teams chats, calendar entries, the lot.

This is called grounding. When you ask Copilot a question, it searches your Microsoft 365 environment (via Microsoft Graph) for relevant context, then sends that context along with your prompt to the AI model. If you have access to a file that answers your question, Copilot can find it, with no need to attach anything manually.

That's why data access controls are so important. If a user has access to sensitive content, Copilot can use that content in its responses. It won't override permissions, but it will amplify whatever the user can already see, including files they've long forgotten about or never knew they could open.
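Here's a conceptual sketch of grounding with permission trimming in Python. It assumes a made-up `Doc` type, an invented `ground` function and naive keyword matching in place of real semantic search; the actual service retrieves content through Microsoft Graph and works very differently in detail. What it does show is the order of operations: filter by permissions first, then pick relevant content, then send it with the prompt.

```python
from dataclasses import dataclass

# CONCEPTUAL sketch only. Doc, ground() and the keyword-overlap scoring are
# hypothetical stand-ins; this is not how Copilot is implemented.

@dataclass
class Doc:
    title: str
    text: str
    readers: frozenset[str]  # users allowed to open this document

def ground(user: str, prompt: str, docs: list[Doc], top_k: int = 2) -> str:
    """Build the text that would go to the model: retrieved context plus the prompt."""
    # 1. Permission trimming: only documents this user can already open.
    visible = [d for d in docs if user in d.readers]
    # 2. Relevance: naive word overlap stands in for real semantic search.
    words = set(prompt.lower().split())
    scored = [(len(words & set(d.text.lower().split())), d) for d in visible]
    ranked = sorted(scored, key=lambda pair: -pair[0])
    relevant = [d for score, d in ranked if score > 0][:top_k]
    # 3. Grounded prompt: context first, then the user's question.
    context = "\n".join(f"[{d.title}] {d.text}" for d in relevant)
    return f"Context:\n{context}\n\nQuestion: {prompt}"

docs = [
    Doc("Q3 plan", "q3 sales target is 2m", frozenset({"alice", "bob"})),
    Doc("Salaries", "q3 salary review for all staff", frozenset({"hr"})),
]
print(ground("bob", "what is the q3 sales target", docs))
```

Bob's grounded prompt includes the Q3 plan but never the salary document, because he can't open it. Give him read access to that document, though, and it becomes eligible context, which is exactly why tidying up permissions matters before a rollout.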

Final Thoughts

M365 Copilot is powerful, but it’s not magic. It works within the boundaries of Microsoft 365’s architecture, permissions and security model. Understanding those boundaries is key to using it safely and effectively.

If you're rolling out Copilot in your organisation, make sure your users understand what it can and can't do, and make sure you know how to protect your data.